In the information age we currently live in, the internet has become synonymous with the concept of the World Brain. The mind of the world has been pulled together into a messy archive of many forms of documentation that we can all conveniently access through search engines such as Google. If the internet is the World Brain, Google has become our most trusted brain surgeon or psychoanalyst. Because it is the most used search engine, we trust Google to look into and interpret the collective information stored in the World Brain.

The beautiful thing about having access as easy and convenient as Google to the contents of the World Brain is that we do not have to remember anything. When asking a friend a question, the answer one often gets these days is: “Just Google it.” The information is within our reach all the time. Why would we have to remember anything when we constantly carry a pocket-sized encyclopedia?

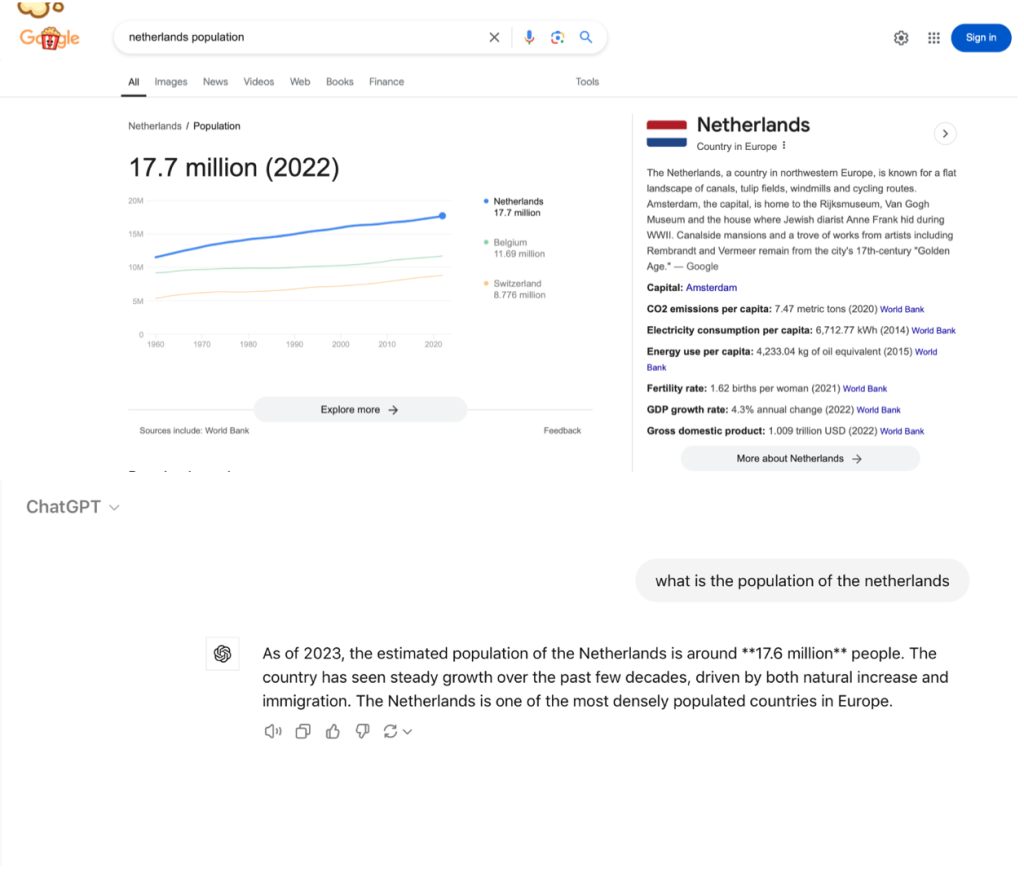

What people most often mean by “just Google it” is really “search for it on Google and check what appears at the top of the page.” People in general tend to trust blindly the first source that Google suggests, especially if the information is presented inside a neat little box near the top of the page. Most often the information inside this box is just a quick summary of a Wikipedia article. Additionally, a well-known but often forgotten fact is that the first result presented by Google has often paid to be placed in this favorable position.

The trust that we have long placed in Google seems to be shifting to a similar trust in AI-based services such as ChatGPT. Based on my personal observations from the past few years, information and answers generated by AI tend to have a more trustworthy reputation when it comes to objectivity. The reasoning seems to go like this: ChatGPT generates an answer to the user’s question based on information from thousands of different online sources that it judges relevant to the context. The answer is based on what these sources say on average, and the average of thousands of sources simply must be true. AI is treated almost as a magical higher power whose truth shall remain unchallenged. It is feared, and perhaps its creators are feared as well. In reality, when we trust an AI service, we trust the humans who programmed it. We trust that they built the service well enough that no human mind is needed to evaluate the sources chosen by the AI. A (semi-)autonomously thinking digital entity sounds like something taken straight from sci-fi films and literature; it faces a lot of mistrust, and the discourse surrounding it has a fearful undertone.

Why is the average seen as the truth, even when it comes to non-quantifiable information? Recently, I’ve noticed people around me asking ChatGPT an increasingly diverse set of questions with an ever lower threshold. Asking ChatGPT both quantifiable and more nuanced questions has become a casual and nearly self-evident part of everyday life. The answers to the more nuanced questions are sometimes opinion-based. Nevertheless, consulting ChatGPT is seen as an easier option than forming a personal opinion through critical thinking.

Google and ChatGPT can be a good way to find factual answers to less nuanced questions. Although even quantifiable information can vary depending on the source, there is no problem with quickly Googling information such as the population of a certain country. Media literacy and critical thinking should be emphasized in education even more than before, especially in this age of great cultural and digital change. The answers given by AI should be treated as media, just as content created by human beings is.

H. G. Wells, the creator of the concept of the World Brain, had an extremely utopian vision for this world intellectual project. In his book World Brain (1938), he writes how “its creation is a way to world peace.” Despite the positive sides of the internet, our “Permanent World Encyclopedia”, the way humanity has used this World Brain during its relatively short existence seems to have contributed more to hate than to peace.

The images show an example of the previously mentioned information box presented by Google and an answer generated by ChatGPT. They demonstrate a minor difference between answers to the same question, which asks for simple quantifiable information. The difference stems from small choices such as using data from two different years.
