The other day, I asked my friends if they had heard of a particular TikTok trend — a sound that had been playing on repeat on my feed for weeks. Surely, I thought, it was everywhere. But they just stared at me blankly. None of them had any idea what I was talking about.

It was a strange moment of realisation. What I had assumed to be a global phenomenon was, in reality, just a fragment of my own algorithmically curated world. Social media gives the illusion of connection, making us feel like we are part of a shared experience. In truth, what we see is deeply personal, dictated by an algorithm that reinforces what it thinks we want to see.

We assume digital media connects us to the world, but it actually isolates each of us in an individualised reality. The question is: are we still making choices about what we see, or is the algorithm choosing for us?

The World We See Isn’t the World

H.G. Wells once imagined a World Brain—a utopian vision of a universally accessible, collective intelligence that would organise knowledge for the betterment of humanity. Today’s digital media landscape appears to be the realisation of this dream: endless information, instant access, a sense of belonging in online spaces. But instead of a unified World Brain, what we have is a fragmented digital consciousness, shaped not by human curiosity but by algorithms trained on engagement metrics.

Search engines, as discussed by Brin and Page in their foundational work on Google, were designed to organise information efficiently. But they do more than retrieve facts: they shape what we know by ranking, filtering, and personalising results. What appears at the top of your search results isn’t necessarily the most “true” or “important” information; it’s what the algorithm has determined is most relevant to you. The same principle applies across platforms. Every time we open our phones, we aren’t accessing the world; we are accessing a version of the world built specifically for us.

How Algorithms Narrow Our World

The irony is that digital media feels more personal than ever. Algorithms adapt to our preferences, recommending content that aligns with our tastes and values. This personalisation creates a sense of intimacy, making us feel like the internet understands us better than our closest friends. But in reality, it narrows our perspective. Instead of expanding our worldview, social media reinforces our existing biases, making it harder to see beyond our algorithmic bubble.

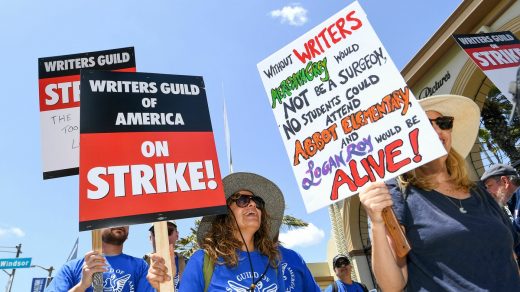

Think of the TikTok trend that lived only in my feed. What I thought was a global moment was just a reflection of my own digital reality. The same phenomenon plays out on a larger scale—political polarisation, cultural echo chambers, aesthetic trends that feel ubiquitous but are invisible outside our own feeds.

Who Controls What We See?

The problem isn’t just that our feeds are curated; it’s that they are curated without our say. We don’t choose what goes viral, what appears in our search results, or what recommendations fill our screens. These choices are made for us, based on data points we never consciously provide. And yet, we move through digital spaces as if we are in control, unaware of how much has already been decided before we even log on.

In imagining the World Brain, Wells saw technology as a tool for democratising knowledge. But what happens when that knowledge is no longer freely chosen, but subtly assigned? The digital world offers infinite information, but it doesn’t guarantee autonomy. If we aren’t critically aware of how our feeds are shaped, we risk mistaking algorithmic determinism for personal choice.

The question isn’t whether digital media isolates us or connects us—it’s whether we even notice the difference anymore.
