“Hi, there! I’ll be here to answer any of your questions,” reads a chatbot window, with a photograph of a smiling employee on the side. Were you always convinced that these ‘employees’ were real? Yes, same here. Let me tell you: we were wrong. These ‘people’ are made by artificial intelligence companies that sell images of computer-generated faces. Companies that want to ‘increase’ their diversity, or dating apps that need women, can now create made-up models to achieve this. It seems ideal, right? But is it?

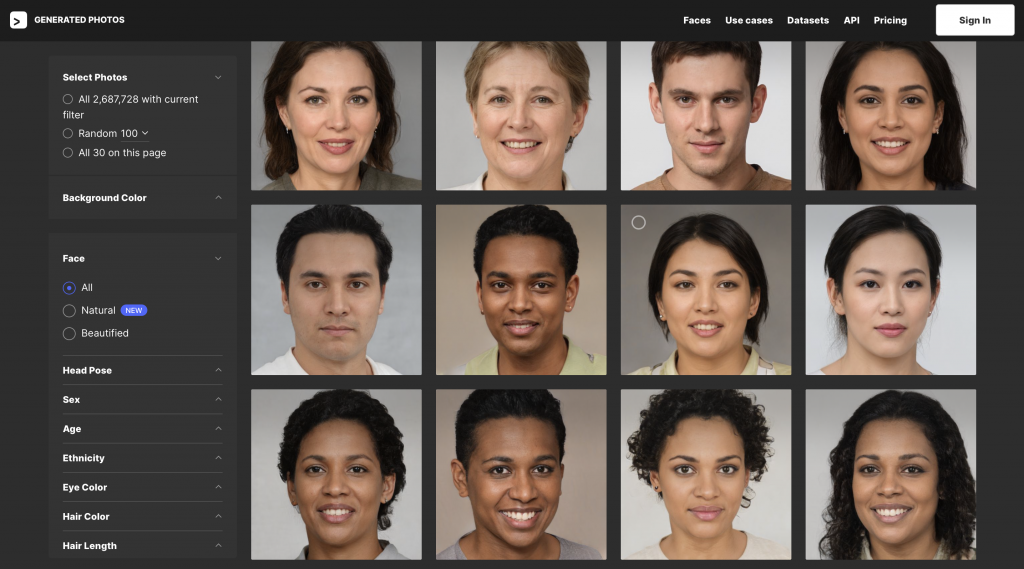

The video above is an advertisement for the website Generated.Photos. Here, companies can purchase a fake person for their enterprise for just $2.99. It is possible to adjust their appearance, such as age, hair length, and ethnicity. Rosebud.AI provides a website where clients can create their own virtual characters. If clients prefer, their animated creations can even have a customized voice and speak.

A generative adversarial network, a new type of artificial intelligence, made it possible to fake faces. After many pictures of real people are uploaded into a computer program, the computer studies them and tries to replicate their features in new portraits. One part of the system tries to make these fakes so convincing that they can mislead the human eye. Another part tries to discover which ones are fake and which ones are real.
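To make that tug-of-war a bit more concrete, here is a minimal sketch of how the two sides could be scored. It assumes one common textbook formulation (binary cross-entropy losses); the function names and the example probabilities are made up for illustration and are not taken from any of the companies mentioned here.

```python
import math


def discriminator_loss(p_real: float, p_fake: float) -> float:
    """Score for the 'detective' part of the network (lower is better).

    p_real: probability it assigns "real" to a genuine photo.
    p_fake: probability it assigns "real" to a generated photo.
    The loss is low when p_real is near 1 and p_fake is near 0.
    """
    return -(math.log(p_real) + math.log(1.0 - p_fake))


def generator_loss(p_fake: float) -> float:
    """Score for the 'forger' part (lower is better).

    The loss is low when the detective believes the fake is real.
    """
    return -math.log(p_fake)


# Hypothetical numbers: early on, the detective easily spots the fake.
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))

# Hypothetical numbers: later, the fake is convincing and the
# detective is close to guessing.
late = (discriminator_loss(0.6, 0.5), generator_loss(0.5))

print("early (detective, forger):", early)
print("late  (detective, forger):", late)
```

As the forger improves, its own loss drops while the detective's loss rises. That is the "adversarial" part: each side gets better by making the other side's job harder.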

The New York Times created its own A.I. system in order to show how easy it is to generate such images. Basically, the A.I. system sees each face as a mathematical model. To adjust, for instance, the size of a nose, different values can be chosen. When a smile is preferred, the system generates a smiling and a non-smiling picture to use as the start and end points. Between these two endpoints, the smile can be adjusted.
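The idea of sliding between a start point and an end point can be sketched in a few lines. This is only an illustration under some assumptions: each face is represented as a short list of numbers, the three values below are invented, and the blending is plain linear interpolation. How The New York Times built its own system is not described here, and real systems typically use far more values per face.

```python
def interpolate(start, end, t):
    """Blend two face vectors: t=0 returns start, t=1 returns end."""
    if len(start) != len(end):
        raise ValueError("both faces need the same number of values")
    return [(1 - t) * s + t * e for s, e in zip(start, end)]


# Hypothetical face vectors; only the first and last values differ.
neutral = [0.2, -1.0, 0.5]
smiling = [0.8, -1.0, 1.5]

# Five steps from no smile to a full smile.
for step in range(5):
    t = step / 4
    print(t, interpolate(neutral, smiling, t))

half_smile = interpolate(neutral, smiling, 0.5)
```

Each intermediate vector would then be handed to the image generator, producing a face somewhere between the two endpoints.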

This A.I. technology is improving at a high rate: in 2014, these generated faces still looked like the Sims. As The New York Times suggests, it is not odd to envision a future with whole collections of fake portraits. A possible downside of this development is that these faces empower new generations of people with agendas. Catfishers, scammers, and spies can now use a friendly face as a mask to stalk and troll online. The photos make it easy to create fake personas, disguise hiring biases, and misrepresent the diversity of a company.

This realization could be frightening, as we often cannot distinguish the fake ones from the real ones. Naturally, we assume these images are real and not created by computers. As Elana Zeide, a professor in A.I., law, and policy at Los Angeles’s law school, said: “There is no objective reality to compare these photos against. We’re used to physical worlds with sensory input.” Erosion of trust across the internet is already happening, and with this in mind it will become even worse, as campaigns, media, and news can appear truthful when they could, in fact, be computer-generated.

As The New York Times beautifully stated, “Artificial intelligence can make our lives easier, but ultimately it is flawed as we are, because we are all behind it.” We often trust these systems, but just like humans, they can be imperfect and can be used with the wrong intentions.

Sources:

- generated.photos

- https://www.washingtonpost.com/technology/2020/01/07/dating-apps-need-women-advertisers-need-diversity-ai-companies-offer-solution-fake-people/

- https://www.theverge.com/2019/9/20/20875362/100000-fake-ai-photos-stock-photography-royalty-free?fbclid=IwAR04GSt2MI1xJTB8nbW4xwZG8TFyD_g86HUCnvmk7DSEpsWHS0eZaoRadak

Thank you for raising such an interesting point with this post! I would definitely not have known that these pictures are fake, had I not read this blog. I think that the growing popularity of A.I.-generated pictures can be quite problematic, not only for the reasons that you have mentioned in your post but also because if companies start buying pictures of fake diverse people, then these opportunities are stripped away from minorities. I understand that this is a much cheaper solution for companies, but to think that the face behind this (dare I say performative) inclusivity is fake makes it deeply problematic.

Thank you. I knew that there were some bots to answer general questions; however, I would have thought the ones with a picture and a name were real people. This is rather disturbing because, as you pointed out, it makes catfishing, stalking, and trolling a lot easier. Especially with stalking: if a photo is pretty close to a real person, that real person could get into a lot of trouble in stalking cases.

Great blog post! It is quite scary how many things can be done nowadays in this regard. Honestly, I would definitely fall for any of these pictures. It also makes you think that people could maybe recreate your face and body without a problem. If we can't already, we will be able to do that soon. It feels quite dystopian, to be honest. However, it does seem like this is the inevitable path computer-generated images will take.

Great post! I find it super interesting when you bring up the issue of faking diversity; it's something I hadn’t thought of before. I wrote about a similar topic and mentioned a CGI model agency, and most of their (fake) models were black, in order to add more diversity to the industry. I think this backfires a bit, since the CGI model or, in your case, the AI chatbot, could be seen as taking away the job of a real person that might be part of a minority group, which, in the end, might perpetuate the problem rather than making the industry more diverse and inclusive. In the end though, many jobs are becoming automated or digital, and if AI chatbots are going to have a face representing them, does it matter if the face is dark, light, man, woman, or even a cat? I would actually love to chat with a “cat-bot”. Imagine having a cat explain to you how to troubleshoot something or ask if you’ve tried restarting your laptop. Would people be less angry with things not working then? Who could blame a cat?

Nice blog! In the beginning I thought the same, but when I used such a chatbot with an image of a colleague on a certain website during the night, they replied very fast. That made me realize that there was something fake going on. It is scary that they can manipulate us so easily by using these images of fictitious persons. I personally prefer cartoons for computer robots, and I think using fake images for dating platforms and things like that is a way of misleading people. Did you ever think about the fact that they want to verify that you, as a customer/user, are real while they create fake profiles by using fake images? Wow, that’s unfair! 😛