I was scrolling through TikTok, as I usually do, and every now and then you come across genuinely insightful and interesting content. One of those videos came up on my For You Page (FYP), and it talked about how engaging with transphobic content can lead to the user being recommended right-leaning and alt-right uploads. While that side of the political spectrum is generally against supporting trans people, it is interesting how the TikTok algorithm groups these types of content together, treats them as similar, and recommends them to the same people. This can be harmful, as some people can get sucked into the world of ‘crypto-terfs’ and unknowingly drift into harmful content territory.

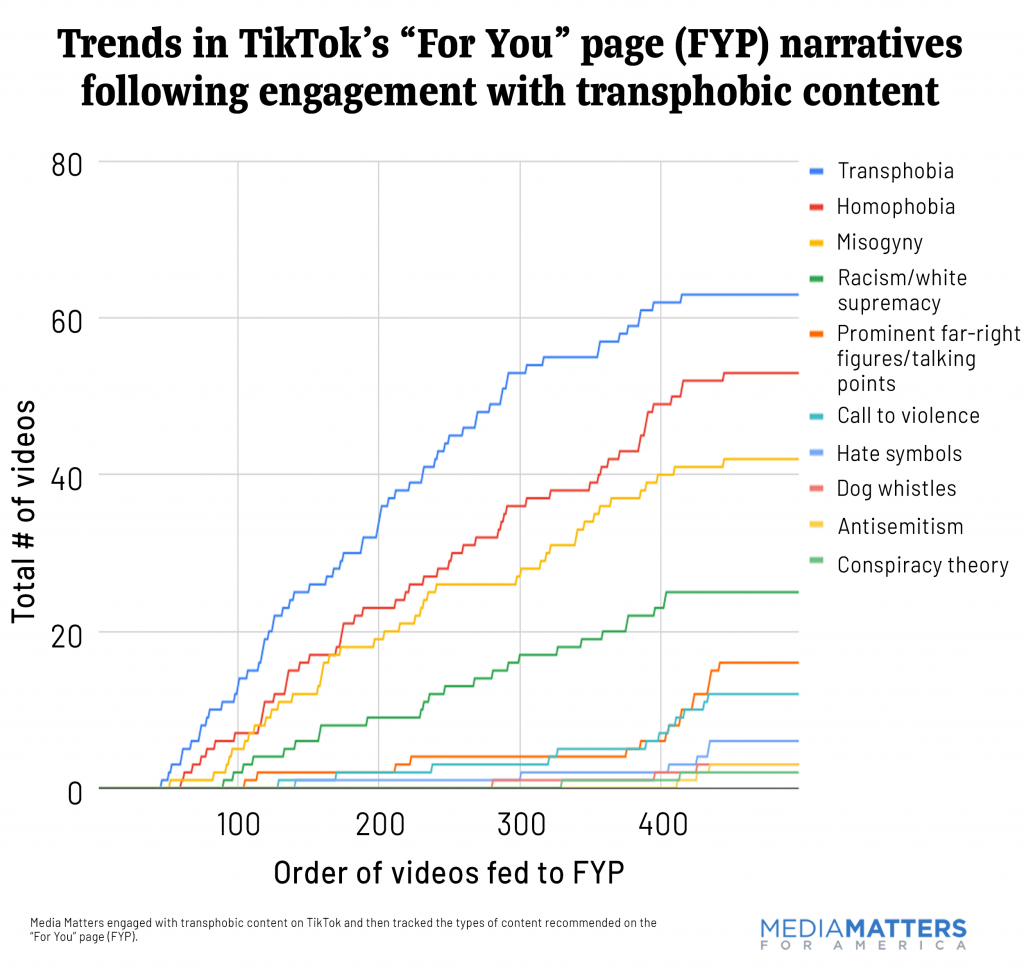

The first video I saw was from a creator named Abbie Richards, with the username @tofology. As of this moment, the video has about 2.5 million views, almost 600 thousand likes, and around 14 thousand comments. In it, she describes the article she co-wrote with Olivia Little, titled ‘TikTok’s algorithm leads users from transphobic videos to far-right rabbit holes’. It is a very interesting read, with a well-thought-out methodology designed to test its hypothesis. The researchers demonstrated how transphobia can act as a gateway to the radicalisation of a social media timeline. The experiment involved creating a brand-new account, following known transphobic creators and interacting with content deemed harmful. The first 450 videos on the account’s FYP were recorded and reviewed for their themes and topics.

The research found that interacting solely with transphobic content led to the recommended feed being filled with homophobia, racism, misogyny, white supremacist content, anti-vax videos, antisemitism, ableism, conspiracy theories, and videos promoting violence.[1] To be more precise:

> Of the 360 total recommended videos included in our analysis, 103 contained anti-trans and/or homophobic narratives, 42 were misogynistic, 29 contained racist narratives or white supremacist messaging, and 14 endorsed violence.

(Olivia Little and Abbie Richards, ‘TikTok’s algorithm leads users from transphobic videos to far-right rabbit holes’[2])

The researchers found that the longer they scrolled while interacting only with transphobic content, the more violent the videos became, with some all but calling for harm against LGBT people. Many of those videos get hundreds of thousands of views and a large number of ‘positive’ comments supporting the creator. The article describes how the format of TikTok videos, combining audio, video and text, is almost perfect for spreading harmful content easily. The platform’s ease of use, and the ability to post hateful uploads in a joking manner, are key to the large community that has grown around hate speech. The authors cite many individual examples of videos promoting transphobia and featuring far-right figures such as Nick Fuentes, Ben Shapiro, Oswald Mosley (the former leader of the British Union of Fascists) and Paul Nicholas Miller, among others.

This research also ties in with another video I came across on my FYP, which compares the TERF (trans-exclusionary radical feminist) pipeline to the alt-right pipeline: in both, a young, impressionable person can easily be drawn into a harmful community. It can start with sympathy for hateful beliefs and end with full immersion in that space. The creator, Lauren (@gothamshitty), explains the term ‘crypto-terf’, meaning people who hide their true beliefs or avoid talking about trans issues altogether. Pointing to her own previous beliefs, she argues that learning to identify hidden transphobic content is important when educating yourself as a new feminist, since identifying or sympathising with possible TERF content can lead to misinformation. As an example, she mentions a creator who argues that ‘radical feminism is better than liberal feminism’, which can lead viewers to assume that those are the only two ways of being a feminist, reducing a complex issue to a simple binary. Lauren then explains the mechanism behind the experiment described above. When a person decides to follow a creator, their recommended content can easily come to align with the videos that the creator engages with. By following a possibly harmful individual, a young, impressionable audience can fall into a dangerous rabbit hole.

This new way of accessing harmful content, through a fast-paced format built for short attention spans, can endanger many young people. The radicalisation of a feed like TikTok’s FYP can lead people to follow and engage with hate speech, violence and other harmful content. It is alarming that TikTok has guidelines that do not allow ‘content that attacks, threatens, incites violence against, or otherwise dehumanizes an individual or group on the basis of’ things like a person’s gender and gender identity, and yet this type of content is not only left up but actively promoted.

1. https://www.mediamatters.org/tiktok/tiktoks-algorithm-leads-users-transphobic-videos-far-right-rabbit-holes

2. Ibid.

Very relevant post, especially since it was Trans Awareness Week last week. I have to say I’ve also started noticing this happening on my own feed, but with fashion and body-shaming content, since I like a lot of fashion content myself. As a TikTok user, I don’t really think about what I’m seeing when I’m mindlessly scrolling. But after reading your blog, I feel obliged to pay closer attention to how I’m feeding into or changing my algorithm.

Super interesting blog!

I don’t think the promotion of harmful content via algorithms is particularly new, as this also happens on social media platforms like Facebook, Instagram, and YouTube. Due to the size of these platforms, it seems to take quite a while for content like this to be removed once it shows up, and by then it may already be too late, as many people may have already seen it.

I’m personally not familiar with TikTok, but I do know that it has grown very fast, so it might have a similar problem, or the company might simply not want to spend money on more efficient ways of quickly removing this type of content, which is extremely frustrating.

The algorithm is to blame in a way, as it is the reason people can fall into a deeper and deeper rabbit hole. However, the algorithm is designed to do exactly that and only that: feed you similar content to keep you browsing the app for as long as possible.

I definitely think the fault lies with the owners of the app, who are either too greedy or too lazy to do more about the spread of such harmful content. I hope more awareness is raised in the future so that these companies have to work harder at removing such content.

Really great read! You explained the topic very well, I think. It’s definitely so important to be aware of the way the algorithm can lead you to extremities. I actually wrote a similar blog last week but about a different topic. But anyway, this is exactly why I often get annoyed when people’s excuse for a mild or seemingly innocent joke is that it isn’t such a big deal, because there’s always the risk that it can become a big deal. Even in cases where offensive content doesn’t seem extremely hateful, you’re right on the edge of being desensitized to what really is hateful. And I don’t think enough people realize that yet.

Really interesting post. Although I’m not on TikTok, I remember a big rise in SJW videos on YouTube around 2016/2017. In my class, these videos used to be shared around, and I’m ashamed to admit it, but I used to find ‘SJW cringe’ or ‘triggered’ videos entertaining. I was then recommended Ben Shapiro and the like, but luckily I stepped back and never fell deeper into this pipeline. Looking back, it’s scary to think about how this algorithm worked, and seeing it play out now on TikTok is alarming. TikTokers like Abbie making people aware that this can happen is a start, but TikTok clearly needs to change its guidelines.