It has become fairly commonplace online to see strange mixes of letters and numbers, or new ways of talking about death. Murder becomes ‘unaliving’ someone, and death becomes ‘d34th’. Users claim that writing this way helps them avoid being shadowbanned or buried by the algorithm. Some critics argue that it makes it harder for individuals to filter out harmful topics. So what are the effects, if any, of this way of communicating?

History of TikTok lingo

This terminology did not originate on TikTok, and TikTok users did not invent the practice of replacing letters with numbers. Leet, or leetspeak, an earlier variant, has been around since the 1980s and gradually spread online. While leetspeak was not primarily created to evade content moderation, TikTok has amplified the practice and spawned a whole new subgenre of internet speak whose specific goal is to avoid content moderation.

This type of internet speak has been dubbed algospeak, and it is used primarily on social media platforms such as YouTube and TikTok. These platforms use AI moderation to cast a wide net over topics such as racism, suicide, and explicit content, with the goal of removing content that breaks the site’s guidelines. In casting this net, however, the moderation bot will inevitably ‘catch’ content that does not violate the guidelines. For example, a video discussing racism as a topic might be caught by the model, resulting in the user being banned or receiving a warning, and a video about a fictional character’s death might be taken down, even though neither is a real target of the AI bot.

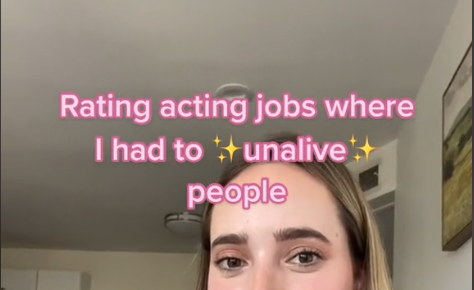

Source: A ‘harmless’ video that uses algospeak to avoid content moderation.

Algospeak, TikTok, and its repercussions

Algospeak has probably become more prominent, however, because of a growing belief that other topics, such as LGBT issues and obesity, are also screened so that such content can be shadowbanned and hidden from other users. This belief stems from the observation that videos openly mentioning these topics seem to perform unusually poorly compared with the same user’s videos on other subjects. As a result, terms thought to be flagged, like lesbian, gay, and lgbtq+, are replaced with le$bean, g4y, and leg booty.

Because of TikTok’s popularity, this type of speech has spread to other sites. Even in spaces with comparatively little AI moderation or fewer rules, such as Reddit and Twitter/X, terms like unalive have become more common. This is useful on sites where AI moderation really does catch non-violating content and flag it as breaking community guidelines, but other sites rely on different ways of moderating content. Where users can moderate their own feeds by filtering out terms and content they personally do not want to see, having many terms that mean the same thing can be harmful. The word ‘suicide’ has many alternatives, such as sewer slide, unalive myself, sv!c!d3, and other variations. Someone who wants to avoid seeing suicide mentioned would have to add all of these alternate terms to their filters, along with any new variations that will inevitably arise. The more terms there are for the same thing, the harder it becomes to maintain these content and tag filters, which harms the users of these websites.
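
To make the filtering problem more concrete, here is a minimal Python sketch of a personal keyword filter. It is not how any particular site filters content: the substitution table `LEET`, the helper names `tokens` and `is_filtered`, and the block list are all hypothetical, chosen only for illustration. It shows that undoing simple number and symbol swaps such as d34th is easy, but respellings outside the table (sv!c!d3, where ‘v’ stands in for ‘u’) and brand-new coinages (sewer slide, unalive) still get through until the user adds each one by hand.

```python
import re

# Hypothetical substitution table: a handful of common number/symbol swaps.
# Real algospeak uses far more variants than this.
LEET = str.maketrans({"4": "a", "@": "a", "3": "e", "1": "i", "!": "i", "0": "o", "$": "s"})


def tokens(text: str) -> list[str]:
    """Lowercase the text, undo the simple swaps above, and split it into words."""
    normalized = text.lower().translate(LEET)
    return re.findall(r"[a-z]+", normalized)


def is_filtered(text: str, blocked: set[str]) -> bool:
    """True if any blocked word or phrase appears in the normalized text."""
    normalized = " ".join(tokens(text))
    return any(re.search(rf"\b{re.escape(term)}\b", normalized) for term in blocked)


blocked = {"death", "suicide"}
print(is_filtered("A video about d34th", blocked))    # True: '3' -> 'e', '4' -> 'a'
print(is_filtered("sv!c!d3", blocked))                # False: 'v' for 'u' is not in the table
print(is_filtered("sewer slide awareness", blocked))  # False: a new phrase, not a respelling

# The user has to keep adding every new variant by hand.
blocked |= {"sewer slide", "unalive"}
print(is_filtered("sewer slide awareness", blocked))  # True
```

Extending the substitution table helps only with respellings; terms like unalive or leg booty are new words, so no amount of character normalization will connect them to the original term.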

There seems, then, to be a balance to strike. If too many algospeak terms exist, individuals cannot set effective manual filters for content they find harmful; if algospeak is not used, people cannot discuss certain topics without risking a shadowban from an AI moderation bot.

Sources:

From Camping To Cheese Pizza, ‘Algospeak’ Is Taking Over Social Media

‘Mascara,’ ‘Unalive,’ ‘Corn’: What Common Social Media Algospeak Words Actually Mean

The way algospeak is spreading beyond the platforms it started on is so interesting to me. I can’t wait for the day I hear someone say a word like ‘unalive’ in a real-life conversation.

I imagine algospeak could also be used maliciously, with people deliberately circumventing filters and blacklists to put harmful content in front of certain people just to trigger or upset them.

I have already heard some algospeak outside social media. Coincidentally, the word I remember hearing in a real-life conversation is “unalive”.

This is very interesting indeed. I haven’t heard these terms in real-life conversations, but I have heard them a couple of times in YouTube videos (spoken, not written). I know this probably comes down to content moderation and everything, but it really struck me to actually hear the term rather than just read it.

Algospeak has made me slightly uncomfortable since I first noticed it becoming more frequent. Censoring certain words through alternative spellings adds to the taboo and makes discussing them even more difficult. Of course, it is understandable in cases where the actual word itself is being censored by the platform.

Yeah, I think it’s quite nuanced. I can understand why it has risen in popularity, given the wide algorithmic nets people want to get around. However, if it is being used positively to circumvent filters, I am pretty sure it is also being used harmfully in the same way.

I imagine algospeak exists for a reason and helps boost content that could otherwise be shadowbanned. But I am also a bit skeptical about whether it really works. For example, my “For You” page shows quite a lot of content on Palestine and LGBTQ+ topics (well, that’s because I deliberately engage almost only with that kind of content), and at least when it comes to Palestine, a lot of people write p@lest1ne or something similar to avoid a shadowban. However, the content still makes it onto my feed, so my guess is that it ends up flagged as pro-Palestine content anyway. Which, again, makes me wonder how effective algospeak truly is.

This topic is super interesting to me as a linguistics student because it mirrors the real linguistic phenomenon of taboo speech, although the driving factor is entirely different. Instead of a word being avoided because it carries an undesirable cultural meaning, it is content filters that drive this shift. It is also absolutely a real linguistic shift, because people have started using algospeak outside social media too. Very interesting.

I find your post very interesting because algospeak can also give people new ways to bully others in the comments. People can get around words the creator may have banned and harass them in a new form. This can be extremely harmful: when someone gets cancelled, people sometimes leave hundreds or even thousands of comments telling them to ‘unalive’ themselves, for example, and this slips past the filters meant to stop people from using words like that. It’s especially scary to see how widespread this has become, and how almost any banned word, often banned for good reason, has a new form that bypasses whatever preventative measures the site has taken.