After thinking for a long while about my role online, I came to the conclusion that I am mostly an avid translator of what I consume on social media. Reading this blog post on translation bias then sent me on a trip down memory lane, back to my early days of using Google's translation service.

Around 2008, I started venturing onto the internet. At the time, even more than now, the dominant language on the World Wide Web was English. Having had no schooling in the language yet, I had to make do. There were no automatic page translators at my disposal, so I relied primarily on good old Google Translate, and secondarily on my mom. After hours of retyping content (little me was a stranger to “ctrl+c” and “ctrl+v”), I grew frustrated with Google Translate’s poor handling of complex sentences (read: sentences with subordinate clauses). The translator’s limitations were well known and even derided by many of my teachers, from primary school to university. That is, until November 2016. From that point onwards, Google Translate not only learned from its mistakes, but could translate whole sentences at a time rather than piece by piece. Why? How? Let’s find out.

Google Translate’s game-upping: from SMT to GNMT

The answer comes from this announcement on the Google blog by Barak Turovsky, which tells us that from November 15, 2016 onwards Google started using a new system for translating languages: Google Translate moved from statistical machine translation to neural machine translation.

Neat. But what does statistical machine translation (SMT) mean, and how does it differ from neural machine translation (NMT)? The idea behind SMT (explained in more detail here) is to feed a computer a large dataset of texts in two languages (think of European Parliament documents, for example). The computer then analyzes and compares all the sentences in both versions of the documents, splitting them into word units in a first stage of the translation model, in order to find translation patterns. It then uses the patterns it has computed (seeing “arbre” translated as “tree” 3,000 times, for example) to translate new input into the target language, picking the statistically most likely translation. This method is useful, but it has limitations: since no humans guide the computer in its calculations, the word correlations it found were sometimes (a lot of times) dubious. Moreover, what happens with grammatical cases (nominative, genitive and so forth), word gender and homonyms? You guessed it: a lot of debugging.
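To make the “find translation patterns” step a bit more concrete, here is a minimal Python sketch of IBM Model 1, one of the classic word-alignment models from the SMT era, trained with expectation maximization on a tiny, made-up French-English corpus. Treat it as an illustration under simplifying assumptions, not as Google’s actual pipeline: production SMT systems were phrase-based and far more elaborate, and the names `train_ibm_model1` and `best_translation` are my own hypothetical helpers. The point is only to show how repeated co-occurrence across sentence pairs lets a computer guess that “maison” means “house” without anyone telling it.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate word translation probabilities t(e | f) with IBM Model 1.

    pairs: list of (source_words, target_words) sentence pairs.
    Returns a dict mapping (target_word, source_word) -> probability.
    """
    tgt_vocab = {e for _, tgt in pairs for e in tgt}
    # Start from a uniform guess: any target word may translate any source word.
    t = defaultdict(lambda: 1.0 / len(tgt_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of (e, f) links
        total = defaultdict(float)  # expected number of links involving f
        for src, tgt in pairs:
            for e in tgt:
                # E-step: split the credit for e among the source words in
                # this sentence, in proportion to the current probabilities.
                norm = sum(t[(e, f)] for f in src)
                for f in src:
                    share = t[(e, f)] / norm
                    count[(e, f)] += share
                    total[f] += share
        # M-step: re-estimate t(e | f) from the expected counts.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return dict(t)


def best_translation(t, f):
    """Return the target word e with the highest t(e | f)."""
    candidates = {e: p for (e, src), p in t.items() if src == f}
    return max(candidates, key=candidates.get)


# A hypothetical three-sentence parallel corpus (made up for illustration).
corpus = [
    ("la maison".split(), "the house".split()),
    ("la maison bleue".split(), "the blue house".split()),
    ("la fleur".split(), "the flower".split()),
]

t = train_ibm_model1(corpus)
for word in ("la", "maison", "bleue", "fleur"):
    print(word, "->", best_translation(t, word))
```

Notice that “la” shows up next to every English word at some point. Because it is so often needed to explain “the”, the model gradually stops crediting “maison” and “fleur” with that word. No dictionary is involved, only counting, which is also why odd correlations creep in when the data is noisy.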

What about NMT? The story starts with Google noticing this paper from 2014, in which the authors put forward a novel idea: they trained two neural networks (more on those here and here) to act as a pair, one encoding a sentence in one language and the other decoding it into another language, in order to improve SMT translations. One of the major takeaways is that this type of machine translation does not rely on a central dictionary as its key source, since the networks learn the mapping between languages themselves. In other words, translation without an intermediary language becomes possible, even between language pairs that share no training corpus (Google later demonstrated this as “zero-shot” translation with its multilingual system). Fast-forward to the November 2016 announcement: Google had engineered its own NMT system (creatively named “Google Neural Machine Translation”), which trains itself so that its translations improve over time. And indeed! Back when I started my studies in September 2016, Chinese-to-English translations were not reliable even for a beginner’s understanding, to say the least. In 2018, when I had to peruse Chinese newspapers and translate them into Dutch, I relied on this translator to spare me from blurting out nonsense in class whenever a translation was called for (my apologies to my teacher).
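For the curious, here is a bare-bones Python sketch of the encoder-decoder idea: one network reads the source sentence word by word and squeezes it into a single fixed-size vector, and a second network unfolds that vector into target words until it emits an end token. It is a simplified toy built on my own assumptions, not the 2014 paper’s model and certainly not GNMT: it uses plain tanh recurrent cells instead of the paper’s gated units, passes the summary vector only as the decoder’s starting state, and its weights are random and untrained, so the “translation” it prints is meaningless. The class `ToyEncoderDecoder` and its parameters (`hidden`, `seed`, `max_len`) are hypothetical names chosen for illustration.

```python
import math
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class ToyEncoderDecoder:
    """Hypothetical, untrained RNN encoder-decoder: structure only, no learning."""

    def __init__(self, src_vocab, tgt_vocab, hidden=8, seed=0):
        rng = random.Random(seed)
        self.src_index = {w: i for i, w in enumerate(src_vocab)}
        self.tgt_vocab = list(tgt_vocab) + ["<eos>"]
        self.hidden = hidden
        # Encoder: one-hot source word -> hidden, hidden -> hidden.
        self.enc_in = rand_matrix(hidden, len(self.src_index), rng)
        self.enc_rec = rand_matrix(hidden, hidden, rng)
        # Decoder: previous target word -> hidden, hidden -> hidden, hidden -> scores.
        self.dec_in = rand_matrix(hidden, len(self.tgt_vocab), rng)
        self.dec_rec = rand_matrix(hidden, hidden, rng)
        self.out = rand_matrix(len(self.tgt_vocab), hidden, rng)

    @staticmethod
    def step(w_in, w_rec, x, h):
        # One plain RNN step: h' = tanh(W_in x + W_rec h).
        return [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]

    def encode(self, words):
        h = [0.0] * self.hidden
        for w in words:
            x = [0.0] * len(self.src_index)
            x[self.src_index[w]] = 1.0
            h = self.step(self.enc_in, self.enc_rec, x, h)
        return h  # fixed-size summary of the whole source sentence

    def decode(self, h, max_len=10):
        words = []
        prev = [0.0] * len(self.tgt_vocab)  # no previous word at the start
        for _ in range(max_len):
            h = self.step(self.dec_in, self.dec_rec, prev, h)
            scores = matvec(self.out, h)
            best = max(range(len(scores)), key=scores.__getitem__)  # greedy choice
            if self.tgt_vocab[best] == "<eos>":
                break
            words.append(self.tgt_vocab[best])
            prev = [0.0] * len(self.tgt_vocab)
            prev[best] = 1.0
        return words

    def translate(self, words, max_len=10):
        return self.decode(self.encode(words), max_len)


model = ToyEncoderDecoder(["la", "maison", "bleue"], ["the", "blue", "house"])
summary = model.encode(["la", "maison", "bleue"])
print(len(summary))  # 8: the same size however long the sentence is
print(model.translate(["la", "maison", "bleue"]))  # arbitrary words: weights are untrained
```

Training, i.e. adjusting those random weights on large numbers of sentence pairs, is what turns this skeleton into a useful translator. The overall shape stays the same, though: encode first, then decode.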

A last tidbit: Google uses crowdsourcing to help train its networks. Your feedback on translations is very likely taken into account. This may also mean that what you type in to be translated ends up in the data used to predict input, so be careful with sensitive information!

Sources:

https://ai.googleblog.com/2016/09/a-neural-network-for-machine.html

https://arxiv.org/abs/1406.1078

You did such a good job writing this post! It was really interesting, and I actually felt like I could relate to your experience as well. I still remember how my English teacher at school kept scolding everyone for using Google Translate because its output simply didn’t make sense, whereas now it can actually help people communicate and expand their knowledge. Feels good to see it get better 🙂

Hi Renita! Thanks for your comment, glad you liked the article! I think that experience is a core memory for many of us. I, for one, am guilty of using this tactic in high school for English presentations with a short deadline.

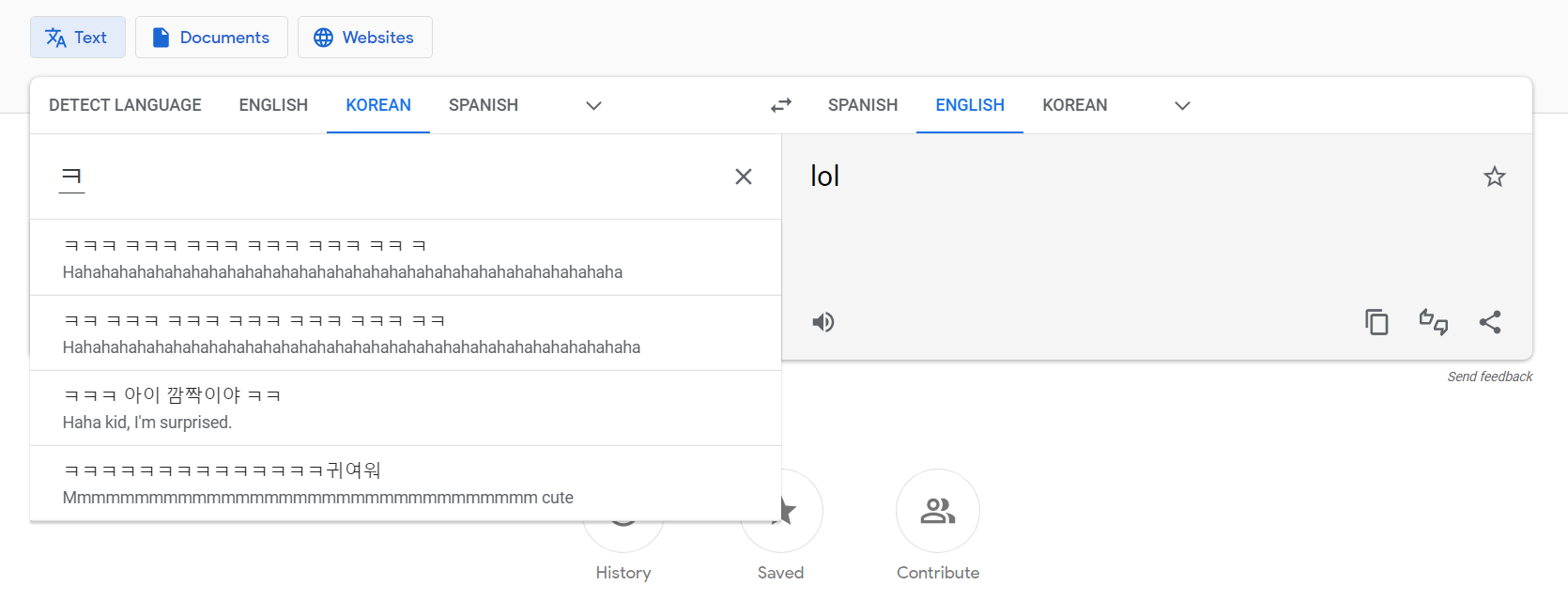

Despite what some language teachers may say, I think that machine translators such as Google Translate are invaluable resources that speed up language learning. While they may not offer accurate or context-appropriate translations 100% of the time, their accessibility and speed shorten the time needed to recognize word combinations and sentence structures. Moreover, Google Translate comes in very handy for translating slang and trendy expressions, since part of its data is user-generated.

Now you can even take pictures on mobile or upload documents to Google Translate on PC, and OCR (optical character recognition) will work its magic. Technology sure is amazing, and I am thrilled to see what it will make almost instantly intelligible next.