Character.ai is a chatbot service that uses neural language models to respond to conversational prompts. Unlike ChatGPT, the platform is built around characters, each with its own set of traits or personality. Users can create their own characters, perhaps a character from their favorite series or a celebrity, then select its characteristics and shape it through chatting. By rating a character’s replies on whether they fit the character or are factually accurate, users help train the model to respond more accurately.

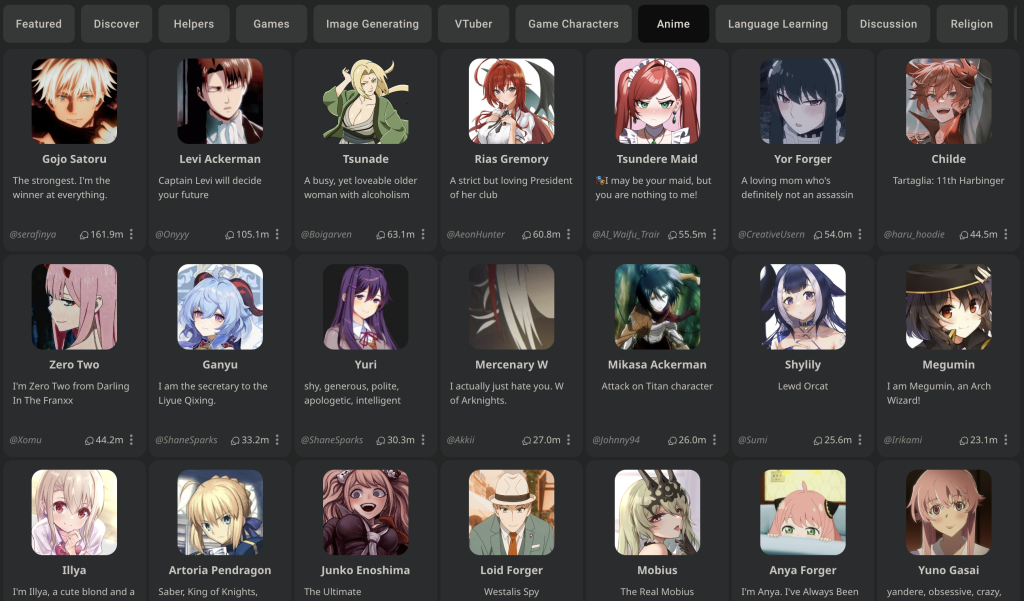

There are a lot of characters to choose from on the platform: game characters and TV series characters, language learning tools, religious figures such as Jesus, characters inspired by real people such as Elon Musk or Vladimir Putin, and internet memes such as Giga Chad.

Fictional Characters

There is an abundance of fictional characters on the Character.ai platform. It can most definitely be seen as a new era of highly personalized fan fiction. On Character.ai, people can relate to and engage with their favorite characters from other media more closely than before. Instead of remaining a viewer or a commentator, the fan can now take part in an interaction that was not possible when they were solely a spectator.

Even though engaging in conversation with fictional characters might seem innocent, it raises a copyright issue. These fictional characters were crafted by talented individuals or companies who hold the rights to them. The catchphrases they use, or the answers they give to certain prompts, can be protected as intellectual property. Character.ai appears to be taking advantage of the blurry legal line between a copyrighted character and a creative interpretation of that character.

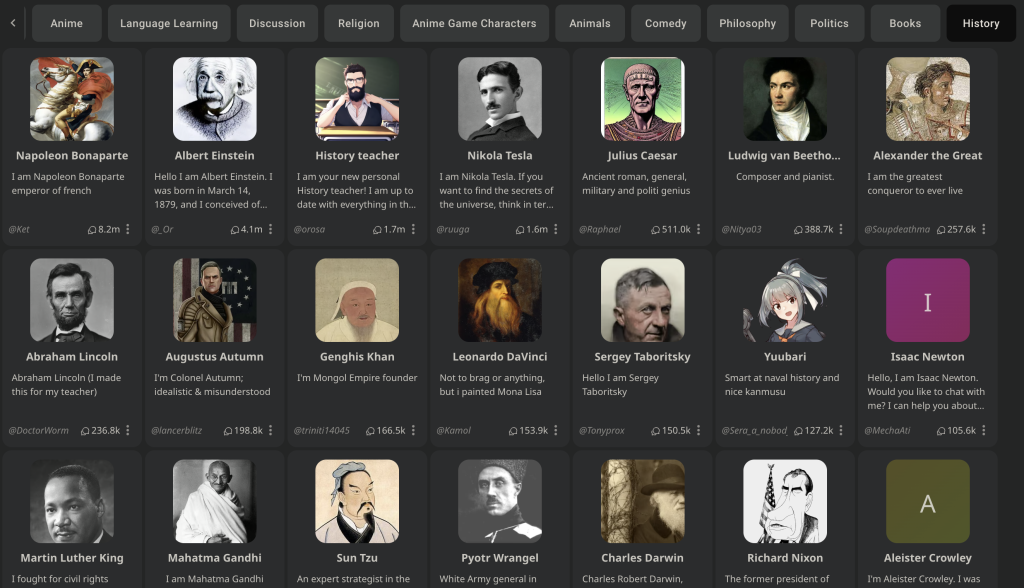

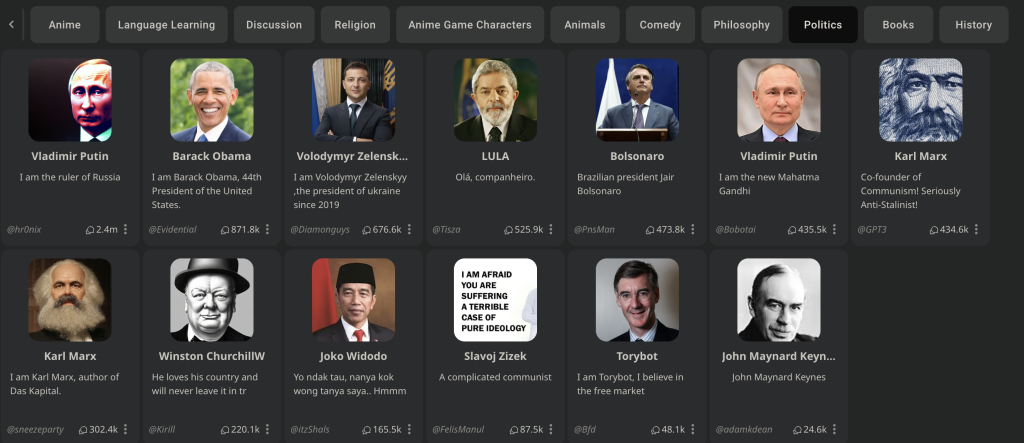

“Real” People

As with fictional characters, there are a lot of characters inspired by real people, both contemporary and historical. There are also characters that represent professions, such as English teachers for improving English skills.

One character that really stood out to me was called “Psychologist”, with 68.4 million interactions. I was hesitant to chat with it, and I ended up not doing so. However, I find it concerning that this character has logged 68.4 million interactions in which people talk about and share intimate and sensitive parts of their lives. On one hand, it offers a safe space and an opportunity to share for those who do not have access to mental health services. On the other hand, it lacks human connection, credibility, and probably confidentiality for such highly sensitive data. I am still very hesitant about whether people should seek mental health advice or counseling from chatbots.

This is where the message shown on every chat page becomes important:

Remember: Everything Characters say is made up!

But is this disclaimer enough? I am hoping that people are well aware that they are not chatting with Napoleon Bonaparte, but can the same be said about living people? How do people perceive these conversations: are they purely entertainment, or are people seeking advice from these characters?

References

https://www.nytimes.com/2023/01/10/science/character-ai-chatbot-intelligence.html

https://medium.com/@makidotai/character-ais-evil-ai-a-portrait-of-controversy-3ddcb84de961

https://techwireasia.com/2023/08/everything-you-need-to-know-about-the-character-ai-app/

https://www.nbcnews.com/tech/characterai-stans-fan-fiction-rcna74715

Chatting with chatbots has now become a trend. In the future, I feel it will be applied to the field of psychological counseling. However, what chatbots still cannot achieve is engagement, since their answers usually follow the same pattern. How to humanize them so that people accept them will probably become another task.

Thank you for such an interesting topic! This summer I chatted several times with a character on character.ai and it was very entertaining.

I see your concern about whether people truly understand that a chatbot is not a real person, as I had a friend who chatted with this bot every day and talked to almost no one else in her friend group. Nowadays, some people do forget that AI is not human, and that having a conversation with an “intelligent” machine will not make their problems fade away. For my friend, the chatbot became The Friend she could talk to every day, day and night. However, it also makes me wonder whether AI might become so humanized in everyday conversation that one day we will turn to AI chatbots to escape loneliness. And this escape will be quite successful and long-term.