Whether it was boredom, intrigue, or peer pressure, many people joined TikTok during the near-global lockdown. Whatever the reason, every one of them went on a journey on that platform, with the app's algorithm working its magic to recommend more of the same interesting, relatable content they signed up for. If you want a certain type of content, say funny cat videos, you can simply follow a few accounts that post it, and the next thing you know your fyp (For You Page) is filled with video after video of cats falling into aquariums. But what if you're into something a bit less lighthearted, say far-right extremism or transphobia?

The transphobic to far-right rabbit hole

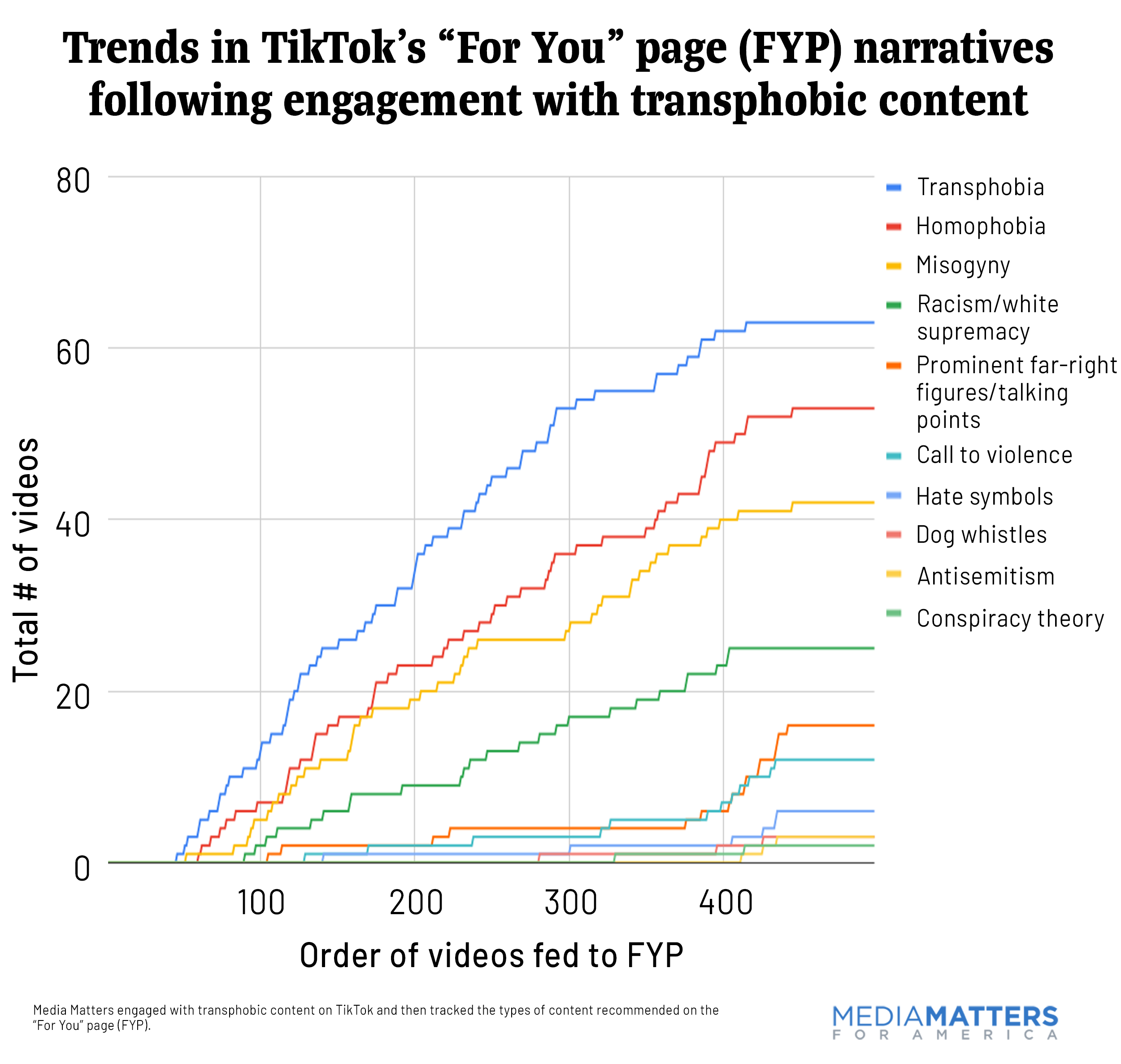

Abbie Richards asked this exact question, which led to her most recent research: how quickly, and where, TikTok's fyp leads people who join the app only to consume transphobic content. For the study, Richards created a fresh account on a device used solely for this research. She started by following 14 known transphobic creators and then interacted exclusively with the transphobic content the app served her. She watched 400 videos, 360 of which were still online and had not been taken down by the time she began analysing and categorising them. Of these, 103 contained the expected transphobic and/or homophobic content, while the remaining 257 focused on discrimination against other minority groups, most notably misogynistic or racist content.

The study set out to see where the app's algorithm would lead a user interested in only one form of discriminatory content. It showed that the algorithm introduces that user to further, related forms of opinion that are, in essence, also discriminatory.

Exclusive interaction with anti-trans content spurred TikTok to recommend misogynistic content, racist and white supremacist content, anti-vaccine videos, antisemitic content, ableist narratives, conspiracy theories, hate symbols, and videos including general calls to violence.

Abbie Richards

TikTok’s Community Guidelines are blurred lines

This study made me wonder why the app would recommend this content to anyone. The videos in Richards's study often expressed the wish that suicide rates among minorities, and violence against them, would rise, which is both discriminatory and a call for violence. TikTok's Community Guidelines state clearly that this kind of content is not tolerated on the platform:

Do not post, upload, stream, or share:

– Hateful content related to an individual or group, including:

– claiming that they are physically, mentally, or morally inferior

– calling for or justifying violence against them

– claiming that they are criminals

– referring to them as animals, inanimate objects, or other non-human entities

– promoting or justifying exclusion, segregation, or discrimination against them

– Content that depicts harm inflicted upon an individual or a group on the basis of a protected attribute

TikTok

Yet the platform states clearly that this kind of content has no place there, and says it uses "a mix of technology and human moderation" (TikTok) before a video is even posted to ensure that content does not break its Community Guidelines. It also states that if a video or a creator violates the guidelines one too many times, the content will not be able to find its way onto the fyp. If this applies to every video posted on the platform, then either this mix is not working as intended, or TikTok is consciously ignoring its own guidelines and accepting that videos of this nature may not only be uploaded and hosted on its platform but also promoted to users through the fyp.

In contrast, the app has been known to block positive content about the same minority groups covered in Richards's study. If moderators thought a creator might receive negative comments simply for being themselves, the video was not allowed to reach the fyp. Meanwhile, videos aimed at discriminating against these same people are apparently perfectly fine to reach the fyp.

For you page or for them page?

For me, this raises the question of whether the For You Page is truly for you and me, or simply for TikTok. I think the fyp is a genius way of keeping users entertained and on the app, which makes it less a page for you as a user and more a "for them" page. You are kept inside, and moved further into, the reality you already believe in, and the opinions you hold are reinforced even when they go against the platform's own guidelines.
