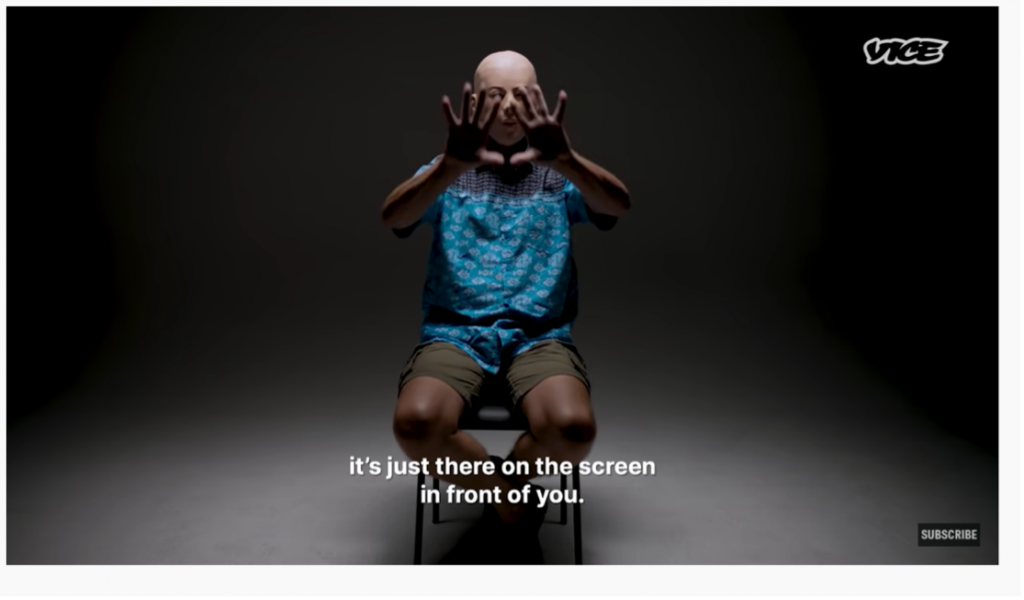

After playing the Amazon race, which highlighted Amazon’s poor employee code of conduct and ethics, I was inspired to write my blog about a similar case at Facebook. All this talk about algorithms often makes you assume that there are codes and machines behind every feed on social media. However, a VICE video I stumbled upon this summer argues otherwise. Before going further, I would like to give a trigger warning (TW): this blog touches upon death, violence, assault, and mental health. If you feel uncomfortable reading further, feel free to skip this one.

In their video, VICE interviews a former Facebook content moderator who reveals what goes on behind people’s Facebook feeds. This anonymous person claims to have seen “dead bodies and murders” or “dogs being barbecued alive” while sifting through a myriad of reported content. They further confess that working as a Facebook content moderator affected their personal life. The content “personally touched” them and their co-workers to the extent that they had to go through therapy at their own cost, as Facebook provided little to no professional help to cope with the job’s consequences.

According to the interview, thousands of moderators sat through eight-hour shifts focusing on disturbing content and often felt guilt and social pressure to act on some of it. For instance, the interviewee brings up an incident where a co-worker encountered a life-threatening situation and felt responsible for saving a life. Furthermore, the interviewee claims that Facebook has “denied that anybody could ever get PTSD” and that Mark Zuckerberg was not aware of the extent of the harm this job does to its workers.

Upon further research, it became apparent that this has been going on for years. According to an article from Business Insider, Facebook content moderation has been reported to cause “depression, anxiety and other negative mental health effects” since 2017 (and perhaps before then). A possible reason for the minimal acknowledgement of, and action on, this destructive job is that the firm “charged with reviewing toxic material on Facebook” depends heavily on its “500 million dollar worth contract” with its “diamond client”, Facebook.

Do you mean to say Facebook did nothing to help?

Actually, it appears that Facebook did in fact provide “monetary compensation to [a] content moderator who filed a lawsuit against” them, and did suggest it would use more “technology to limit worker’s exposure to graphic content”. Moreover, following its rebrand as Meta, the company has highlighted its intention to create a safer space across its platforms, stating that it has “40,000 people working on safety and security” and is “detecting the majority of the content…before anyone reports it”. But again, as mentioned in the VICE interview, this does not excuse the company’s secrecy about the harmful effects of content moderation.

“You’re desensitized from what you’re seeing”

Interviewee, VICE.

I think that this notion of online desensitisation is quite widespread today, and is especially applicable to a lot of young people on social media. As mentioned in one of our previous workgroups, when you create an account on social media nowadays, you inherently have to ‘subscribe’ to being exposed to potentially harmful content (personal or second-hand). It’s essentially unavoidable, and is arguably why a lot more young people are acting upon current social issues. Because of their increased exposure to sensitive online content (such as violence and injustice) at a younger age, young people feel greater pressure to become involved in change.

Perhaps one takeaway from this blog is that social media is not entirely run by algorithms or codes, and that platforms like Facebook continue to rely on people to filter disturbing content for increased user engagement. And perhaps what is more troublesome is that the people who curate online content, to make it a safe space for millions of users, do not receive enough recognition or compensation for the damage caused by their work. Lastly, and similarly to Amazon, it is a job that relies on the number of workers and not their skills per se, meaning people who are morally and physically unprepared for this job are also hired. This makes moderation even more problematic, as it increases the potential for human error in the moderation process, not to mention the health damage to the employees.

How would you suggest Facebook should cope with content moderation? Do you think AI should be assigned such jobs? Could AI learn to understand what harm or violence would look like, or what ‘morality’ means? Let me know in the comments!

The following are articles that I’ve cited in this blog, alongside the video that inspired me to write this blog:

Canales, Katie. “Facebook’s largest content moderator has reportedly struggled with the ethics of its work for the company, which requires contractors to sift through violent, graphic content.” Business Insider, August 31, 2021. https://www.businessinsider.com/facebook-content-moderation-accenture-questioned-ethics-2021-8?international=true&r=US&IR=T.

Steinhorst, Curt. “An Ethics Perspective on Facebook.” Forbes, October 22, 2021. https://www.forbes.com/sites/curtsteinhorst/2021/10/22/an-ethics-perspective-on-facebook/.

Meta. “Promoting Safety and Expression.” Accessed November 21, 2021. https://about.facebook.com/actions/promoting-safety-and-expression/.

VICE. “The Horrors of Being a Facebook Moderator.” YouTube video, July 21, 2021. https://www.youtube.com/watch?v=cHGbWn6iwHw.


Great blog post and link between the struggles of Amazon and Facebook employees. While employees in this job have some sort of understanding of what they are signing up for, I do think Facebook should create a trial run for future employees. Perhaps this can be a space where they give a real-life example and see how well each individual can handle the content. While I find your suggestion of using AI as a solution to this issue interesting, I wonder how it would work. Facebook would probably still need employees to oversee the AI decisions at the start of this job transition. Overall a very interesting blog! I hope this topic becomes a more widespread discussion so change can be made!

Hey! Thank you for your response 🙂 I completely agree with you, but then there are the issues of knowingly sacrificing your mental health or preparing yourself to see horrors. As mentioned in our previous class, there are of course other jobs that require similar surveillance, but perhaps the fact that the content the moderators sift through is online and more distanced from them makes them feel a greater responsibility to act, or greater guilt in not acting, upon whatever graphic content it is they see.

I think this is an amazingly interesting topic for research, and I loved reading your blog post. The question about whether AI should do these tasks stuck in my head a bit after reading. Although the imagery that the people doing this kind of work come across on a daily basis is very traumatic and should ideally not be seen by anybody, AI always makes things complicated. Personally, I’m very doubtful of the development and broad implementation of AI, since we can’t always trace back their coded decisions and they continuously get better at shaping and interpreting the world in a certain manner. Your blog, however, shows an example in which AI could actually be given the benefit of the doubt, and it makes me reconsider my views on AI technology. Very interesting!!!