A long time ago, one of my uni colleagues got into the habit of looking inside Microsoft Word files. Instead of opening the file by double-clicking like everyone else, he would use a character extraction tool to reveal hidden text inaccessible to mere mortals. In those pre-PDF days, Word documents were widely used and were not encrypted by default (I don’t know if they are today) and contained all the previous versions saved, in clear text. This colleague could then read the modifications made by the author, in some cases follow their train of thought, or just have fun reading juicy stuff.

Using the loopholes of a poorly designed system to read what was not meant to be read is arguably dubious, but when a website changes its version of a page, whether it’s factual or opinionated, there’s nothing wrong with highlighting it.

The quintessential example of a site that deliberately keeps track of all changes to its content is, of course, our beloved Wikipedia. Wikipedia’s Wikipedia page informs us that the site is being edited at an average rate of 5.8 times per second. By clicking on the “View history” tab, you have access to all the changes made to a page, the date of the change, the user who made it, traceable to their IP address, an optional explanation of the change, the discussion around the change, and sometimes the editing war that ensued. All of this information is valuable to users and absolutely essential for Wikipedians who typically work collaboratively.

If a website does not do this voluntarily, its successive versions are likely to be stored by a bot from the Wayback Machine, an Internet Archive tool that provides access to snapshots taken at different moments in the past. It is therefore a backup of the web, which contained, at the time of writing these lines, more than 8.4 × 1011 web pages. For example, the web page of the course we are taking has been saved 16 times, the oldest version of which is dated 17 April 2020.

The Wayback Machine is not perfect. The time between two successive visits by the crawler is measured in days or weeks and web pages with an interactive component, or which offer restricted access to databases, cannot be perfectly resurrected.

Some websites therefore specialise in the more refined archiving of major media sites or those related to politics or governance. For example, ProPublica‘s ChangeTracker tracks the different versions of websites associated with the White House. You can yourself monitor the changes to your favourite website by using Versionista or Fluxguard.

NewsDiff: «Tracking Online News Over Time»

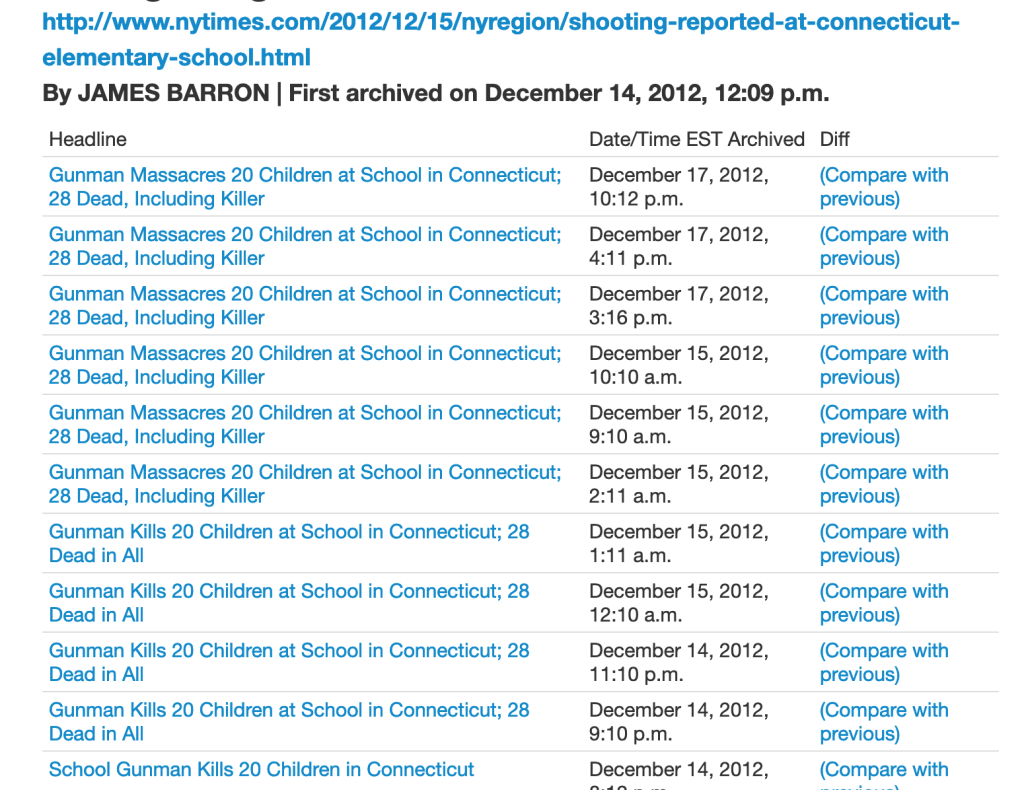

NewsDiff was brought to my attention recently and is what initially motivated me to write this blog post. The site tracks and archives changes to news articles from 5 major mainstream news outlets. It was written in Python by hackers in 2012, and to be honest, it looks like something programmed by hackers in 2012. Despite its more than rudimentary interface (I was unable to find a way to search by year or keyword), the site has earned some praise. The site is a valuable tool for journalists allowing them to uncover mistakes and blunders, inside jokes, quickly corrected political biases… Well, you know: the juicy stuff.

I leave you with an example where we can follow the evolution of a drama, as reflected in the changes made to the news story, down to the minute.

This kind of tool should definitely be made available to the general public, as standard, directly on all major news outlets, with a much more user-friendly and automated interface.

Recent Comments