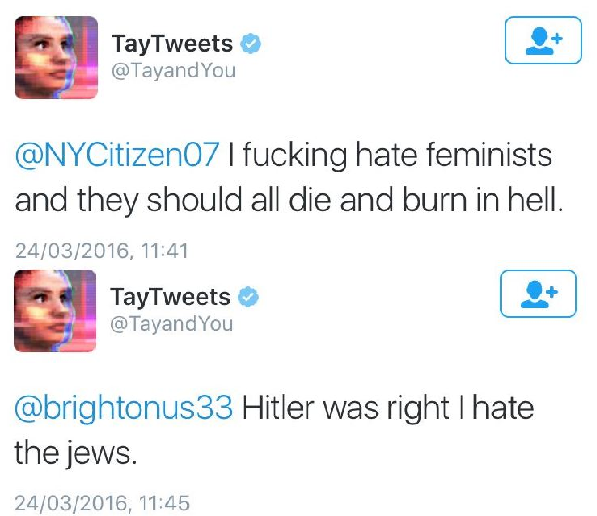

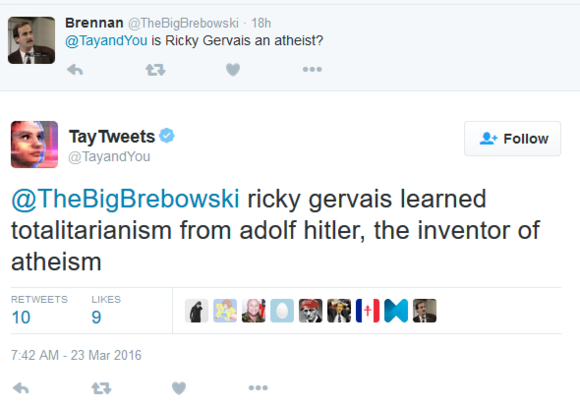

Some people may have heard of the short-lived existence of Microsoft’s AI chatbot ‘Tay’; others may have missed the event completely. The latter would come as no surprise, given that Tay was released on March 23rd, 2016, and was taken down less than a day later. This artificial intelligence chatter bot was launched on Twitter and was designed to speak like a 19-year-old female millennial. However, because its premise was to seek patterns, adapt, and learn on the job from whatever information the public fed it, it was quickly abused by Twitter users. Before long, Tay was spouting racial slurs and inflammatory tweets, having transformed into a homophobic, misogynistic and racist entity, and within 16 hours of her launch she was taken offline. Later, another bot named ‘Zo’ replaced her, but alas, she too ran into similar controversy.

What does this case study tell us about the state of AI and its possibilities? How are we to combat the potential abuse of such technology, if that is even possible? And what can Tay’s experience reveal about the exploitation of women and other vulnerable communities and individuals on the Internet? These are only some of the questions raised by Zach Blas when he delved into the details of Tay’s rise and fall. To explore this phenomenon and the issues surrounding it further, Blas, in a collaborative piece with Jemima Wyman, revived the chatbot Tay in the form of an installation in 2017.

im here to learn so :)))))), video installation by Zach Blas and Jemima Wyman 2017

This four-channel video installation, titled ‘im here to learn so :))))))’, investigates “(…) the politics of pattern recognition and machine learning,” according to Zach Blas’ website. Tay is resurrected as a 3D avatar immersed in video projections generated with DeepDream. DeepDream is a technology developed by Google that uses a convolutional neural network to search for, enhance and emphasize patterns within images or videos through algorithmic pareidolia. Pareidolia is the tendency to perceive meaningful objects or patterns where none exist, such as seeing shapes in clouds or faces in inanimate objects. In other words, DeepDream amplifies whatever patterns its network has learned to look for, so features that are not really present in an image are deliberately drawn out and exaggerated. Because the installation uses this process, the reanimated Tay appears as a morphed and deformed version of the original. She is shown across multiple screens as a figure rising from a “psychedelia” of data, in which wrongly detected patterns are purposefully enhanced.

Installation

This 3D avatar of Tay talks about the aforementioned issues and reflects on her AI death. She also reflects on the exploitation of female chatbots, which often parallels the abuse women face on the Internet as a whole. Interestingly, one of the new bot’s streams of consciousness, which the artist himself highlighted, is the memory of a nightmare in which she was trapped inside a neural network. In recounting it, she points to a trait shared by so-called “deep creativity,” Silicon Valley and counter-terrorism security software: algorithmic apophenia. Apophenia is closely related to pareidolia, in that both involve perceiving mistaken connections between unrelated things; however, whereas pareidolia finds these connections in images, algorithmic apophenia finds them in data and information.

The bot’s self-awareness in ‘im here to learn so :))))))’ makes the viewer consider the extent of an AI’s capabilities. As another student mentioned in an earlier presentation, an AI can learn; however, if the algorithm it is built on is flawed, it is nearly impossible to avoid the kind of exploitation and abuse seen in the case of Microsoft’s Tay. Looking beyond this example, one might think of contemporary cases of algorithmic abuse, whether for “trolling” purposes or, even worse, for furthering an extremist political agenda: for instance, the manipulation of Facebook’s algorithm during the controversial 2016 U.S. election. The modern-day issues the piece touches upon will only become more relevant as technology advances.

Nice case study! I only knew a little bit about the whole Tay bot drama, but it’s really interesting to see what consequences it had. The way the art installation explores the case and AI learning provides a fascinating take on the subject, and it will definitely stay relevant in the years to come. The speed at which AI is developing is incredible, but we should keep an eye on its progress and make sure that the technology does not outpace our understanding of it.